Best practices suck! OK, most of them don’t. I just wanted to be dramatic.

However, with best practices, you’re only halfway there. They help a lot at the start when you don’t know what to do and want to make as few mistakes as possible.

Later, you should focus on what works best for your business, and one of the best ways to find that out is to run an A/B test. For that, you need a good testing strategy, which we will explore in this article. Unless you have a very small account, you should be running at least one test a month.

This year is almost over, and it might be a good time to start thinking about 2025.

What is A/B testing?

Google Ads testing works the same way as any other testing; you just have fewer elements to test. The general idea is to have two variations, usually the original (A) and the test (B), and run them side by side to determine whether the change has any impact on the results.

Since both variations run at the same time, fewer outside factors can skew the results than in a before/after comparison.

For example, here are a few elements you can test:

- Headlines and descriptions.

- Assets (callouts, sitelinks, images, etc.)

- CTAs

- Landing pages

- Bidding strategies and so on.

Benefits

- Eliminates guesswork and opinions. I’m sure you have sat in a meeting where someone insisted this or that was better because they just “know.”

- Allows us to base decisions on data.

- Tracks improvements with minimal effort.

Challenges

- Short testing periods may provide false positives.

- Low traffic and/or conversions. There might not be enough data to reach statistically significant results.

- Testing without a proper hypothesis can limit learning.

Google Ads testing framework

To implement a good test, and most importantly, to do that consistently, you need a framework. I will share a very simple yet effective framework that I use on most accounts. The exception might be accounts with very large budgets, which call for somewhat different tactics and usually a third-party testing tool as well.

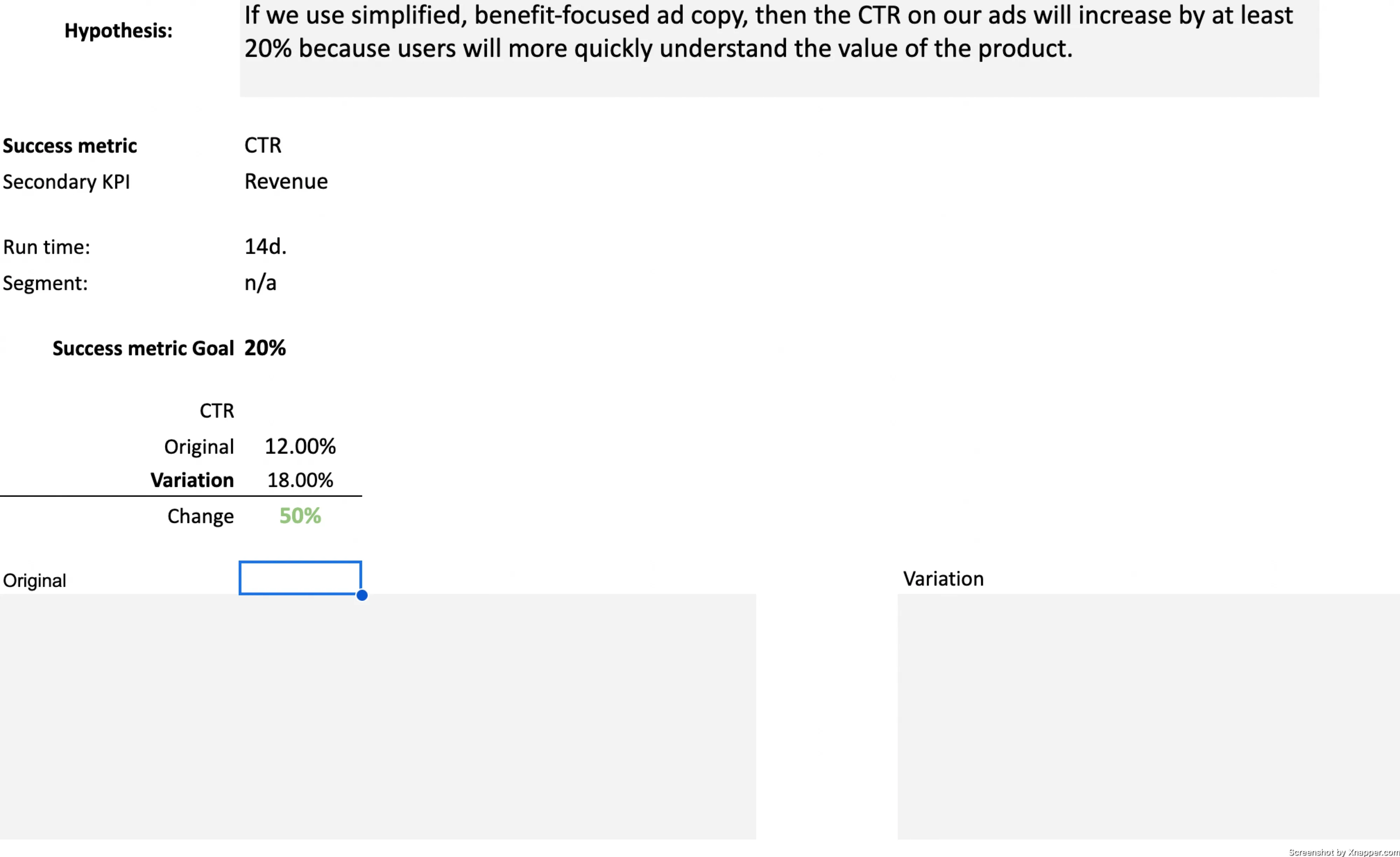

1. Write your hypothesis

It’s the first step and probably the most crucial. You need to clearly identify what is being tested and which assumptions you want to test. A good hypothesis not only helps you choose the right metrics; even if the test fails, it still gives you additional learnings.

Keep in mind that about 8 out of 10 tests fail: they either produce inconclusive results or show no change with the variation being tested.

How do you write one? Use this structure:

“If [we make X change], then [Y outcome] will happen because [Z rationale].”

X = the change or variable you’re testing.

Y = the expected result or measurable outcome.

Z = the reasoning behind why you believe this outcome will occur.

For example:

“If we use simplified, benefit-focused ad copy, then the CTR on our ads will increase by at least 20% because users will more quickly understand the value of the product.”

X = the change or variable you’re testing – “simplified, benefit-focused ad copy”

Y = the expected result or measurable outcome – “the CTR on our ads will increase by at least 20%”

Z = the reasoning behind why you believe this outcome will occur – “because users will more quickly understand the value of the product.”
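If you keep your hypotheses in a script or export them from your testing sheet, storing X, Y and Z as separate fields keeps every hypothesis in the same shape. Below is a minimal Python sketch; the `Hypothesis` class is a hypothetical helper for illustration, not part of Google Ads or any library.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    """Hypothetical helper: one test hypothesis split into its three parts."""
    change: str     # X: the change or variable you're testing
    outcome: str    # Y: the expected, measurable result
    rationale: str  # Z: why you believe the outcome will occur

    def sentence(self) -> str:
        # Render the "If X, then Y because Z." structure
        return f"If {self.change}, then {self.outcome} because {self.rationale}."


h = Hypothesis(
    change="we use simplified, benefit-focused ad copy",
    outcome="the CTR on our ads will increase by at least 20%",
    rationale="users will more quickly understand the value of the product",
)
print(h.sentence())
```

Keeping Y as its own field also makes it easy to copy the success metric straight into your test log.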

This structure also defines a success metric – CTR.

If the test wins, you know exactly what caused it: benefit-driven ad copy. If it fails, you still learn that benefit-driven copy doesn’t have a measurable impact on CTR. So a clear hypothesis lets you learn whether the test wins or not. This is very important.

Sometimes you won’t reach the goal you set for your success metric (20%). If the results are statistically significant but CTR increased by only 15%, it’s up to you to decide whether to use the new ads. The ad copy did have an impact; it just wasn’t as big as you predicted. Again, that’s a learning: now you know this change lifted CTR by about 15%, not 20%.
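Checking a result against your goal is simple arithmetic. A minimal Python sketch, assuming the 20% goal means a relative increase in CTR (for example, from 4.0% to 4.8%), not 20 percentage points; the CTR values are made up for illustration, and the function names are hypothetical.

```python
# Assumption: goals are relative lifts (0.20 = +20% over control),
# not percentage points.

def relative_lift(control: float, variant: float) -> float:
    """Relative change of the variant over the control (0.15 means +15%)."""
    if control <= 0:
        raise ValueError("control value must be positive")
    return (variant - control) / control


def meets_goal(control: float, variant: float, goal: float) -> bool:
    """True if the variant's relative lift reaches the goal."""
    return relative_lift(control, variant) >= goal


# Illustrative numbers: control CTR 4.0%, variant CTR 4.6%
lift = relative_lift(0.040, 0.046)
print(f"Relative CTR lift: {lift:.0%}")  # Relative CTR lift: 15%
print("Goal of +20% reached:", meets_goal(0.040, 0.046, 0.20))  # Goal of +20% reached: False
```

This only compares the size of the change with your goal; whether the change is real is a separate question of statistical significance.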

Each test should teach you something about your audience. If a test leaves you without any learnings, go back to your hypothesis.

2. Audience and duration

In most A/B tests, you define an audience because it ties back to your learnings. Not every change applies to every user. Sometimes it will, but other times you will need to segment.

In Google Ads, the campaign more or less defines the audience. If you’re testing all the ads in a campaign, make sure there aren’t any ad groups where the changes don’t make sense. Say you have a women’s clothing ad group and a men’s clothing ad group. The two audiences can react differently to the new ads, especially if your hypothesis is about improving CTR for women’s clothing ads. In that case, the changes could hurt the men’s clothing ad group.

The other thing is duration. I’ve seen so many tests fail because they were stopped too soon. There are a few ways to determine test duration:

- Even if the test shows a statistically significant winner early, try to keep it running for at least two weeks. With a lot of conversions, significance can come quickly, but you still need to run longer so that every day of the week is covered. With less traffic or fewer conversions, you will probably have to test for 4 weeks.

- What is your sales cycle? If customers usually buy within 7 days, you’re fine. However, some products or services take longer to consider before purchase. Try to keep the test running for a full sales cycle.

- If nothing else, run it for 4 weeks or longer and wait for statistical significance.
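The rules of thumb above can be paired with a rough estimate of how much data a test needs. The sketch below uses the standard sample-size formula for comparing two proportions with a two-sided test (5% significance, 80% power), a general statistical technique rather than a Google Ads feature. The baseline rate, expected lift, and daily clicks are assumptions you fill in from your own account; the 50/50 split, the two-week minimum, and rounding up to full weeks follow the advice above.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p_base: float, rel_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate units (e.g. clicks) needed in EACH variant to detect a
    relative lift in a rate with a two-sided two-proportion z-test."""
    p_test = p_base * (1 + rel_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    variance = p_base * (1 - p_base) + p_test * (1 - p_test)
    return math.ceil((z_alpha + z_power) ** 2 * variance / (p_test - p_base) ** 2)


def estimate_duration_days(p_base: float, rel_lift: float, daily_units: int,
                           min_days: int = 14) -> int:
    """Days to collect enough data with a 50/50 split, never shorter than
    min_days, rounded up to whole weeks so every weekday is covered."""
    per_variant = sample_size_per_variant(p_base, rel_lift)
    days = math.ceil(2 * per_variant / daily_units)  # both variants share traffic
    days = max(days, min_days)
    return math.ceil(days / 7) * 7


# Hypothetical account: 5% conversion rate, 300 clicks a day,
# hoping to detect a 20% relative improvement.
print(sample_size_per_variant(0.05, 0.20))
print(estimate_duration_days(0.05, 0.20, daily_units=300))
```

Small expected lifts or low baseline rates push the required sample up quickly, which is why low-traffic accounts often need 4 weeks or more.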

Why does statistical significance matter in testing?

Statistical significance helps ensure that you’re basing decisions on genuine patterns rather than random fluctuations in the data. It tells you how unlikely the observed difference would be if the variation actually had no effect. Common confidence levels are 90%, 95%, and 99%, with 95% being the most commonly used. A 95% confidence level means that if there were really no difference between A and B, you would see a difference this large by chance less than 5% of the time.

It gives you the confidence to implement changes that are more likely to yield repeatable, reliable outcomes.
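Google Ads Experiments tells you when results are conclusive, but if you track results in your own spreadsheet and want to double-check them, a two-proportion z-test is one common way to check significance for rates like CTR or conversion rate. A minimal Python sketch using only the standard library; the click and impression counts are invented, and the normal approximation it relies on needs a reasonable number of clicks (or conversions) in each variation.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(successes_a: int, trials_a: int,
                          successes_b: int, trials_b: int):
    """Two-sided z-test for the difference between two rates, e.g. clicks out
    of impressions (CTR). Returns (z, p_value)."""
    rate_a = successes_a / trials_a
    rate_b = successes_b / trials_b
    # Pooled rate under the "no real difference" assumption
    pooled = (successes_a + successes_b) / (trials_a + trials_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / trials_a + 1 / trials_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Invented numbers: A (original) 400 clicks / 10,000 impressions,
# B (test) 470 clicks / 10,000 impressions.
z, p = two_proportion_z_test(400, 10_000, 470, 10_000)
print(f"z = {z:.2f}, p = {p:.3f}")
print("Significant at 95%" if p < 0.05 else "Not significant at 95%")
```

A p-value below 0.05 corresponds to the 95% confidence level described above.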

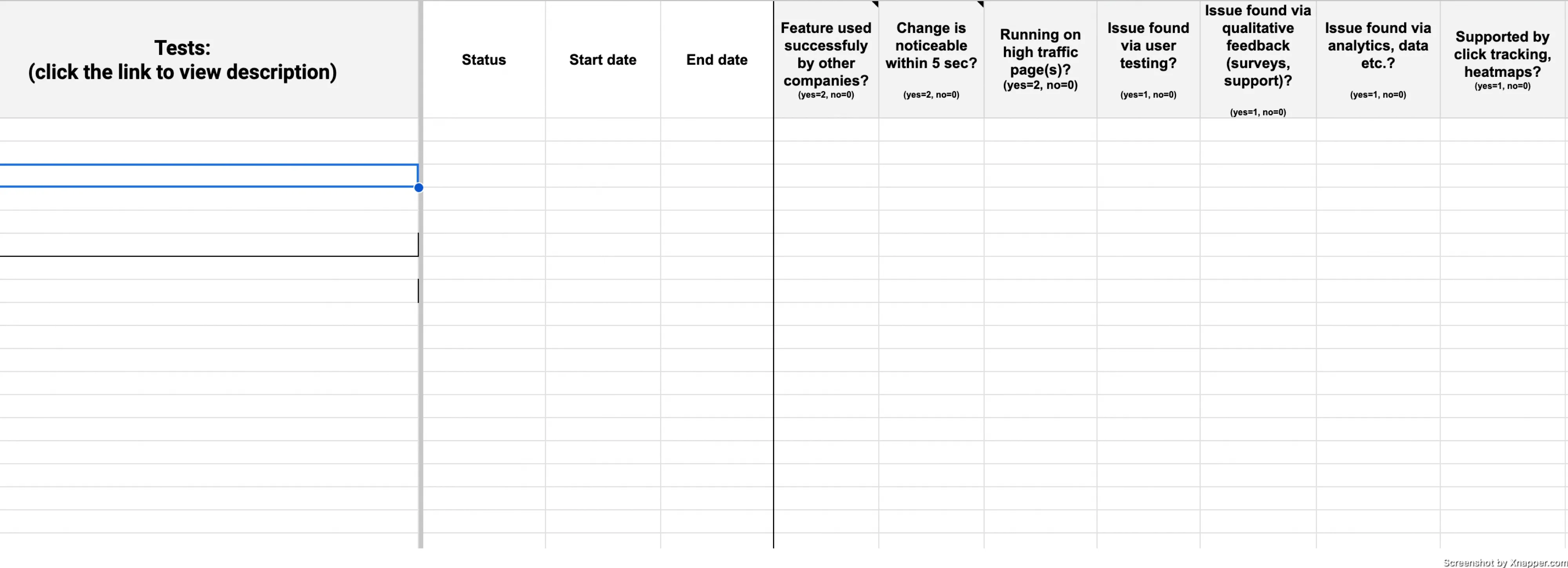

3. Document all tests in one place

Documentation isn’t only for you, although it helps you a lot: you are less likely to repeat the same tests months later. Let’s face it: we forget things.

You don’t have to complicate this part. Just use a spreadsheet and write down what we discussed above: the hypothesis and, once the test is finished, the results.

You can use my simple Google Sheets template (copy it to use):

It’s pretty straightforward. You have your hypothesis, then the metrics and how they changed. You can add screenshots or the text of what is being tested. Below that, you can describe the results in more detail, sort of like a note to yourself or to your boss.

Additionally, the first tab lists all the ideas I want to test. I also score each idea based on how much it could impact results and how much effort the test would take to implement. If you have many ideas, scoring helps you decide which ones to test first.
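The scoring doesn’t need to be sophisticated. One way to do it, sketched in Python below, rates each idea from 1 to 5 for impact and effort and ranks ideas by impact divided by effort. The 1 to 5 scale, the ratio, and the example ratings are assumptions for illustration; adjust them to whatever scale your sheet uses. The idea names come from the list later in this article.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    impact: int  # 1 (low) to 5 (high): expected effect on results
    effort: int  # 1 (easy) to 5 (hard): work needed to set up the test

    def __post_init__(self):
        for value in (self.impact, self.effort):
            if not 1 <= value <= 5:
                raise ValueError("impact and effort must be between 1 and 5")

    @property
    def score(self) -> float:
        # Hypothetical rule: high impact and low effort rank first
        return self.impact / self.effort


ideas = [
    Idea("Benefit vs. feature headlines", impact=4, effort=1),
    Idea("New landing page hero image", impact=3, effort=3),
    Idea("Maximize conversions with target CPA", impact=5, effort=2),
]

for idea in sorted(ideas, key=lambda i: i.score, reverse=True):
    print(f"{idea.score:.2f}  {idea.name}")
```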

You can skip the scoring part for now. Just list the ideas.

Don’t skip the documentation itself, though. It can also be a big advantage when you’re applying for a job: you will have a record of every test you’ve run to prove your experience.

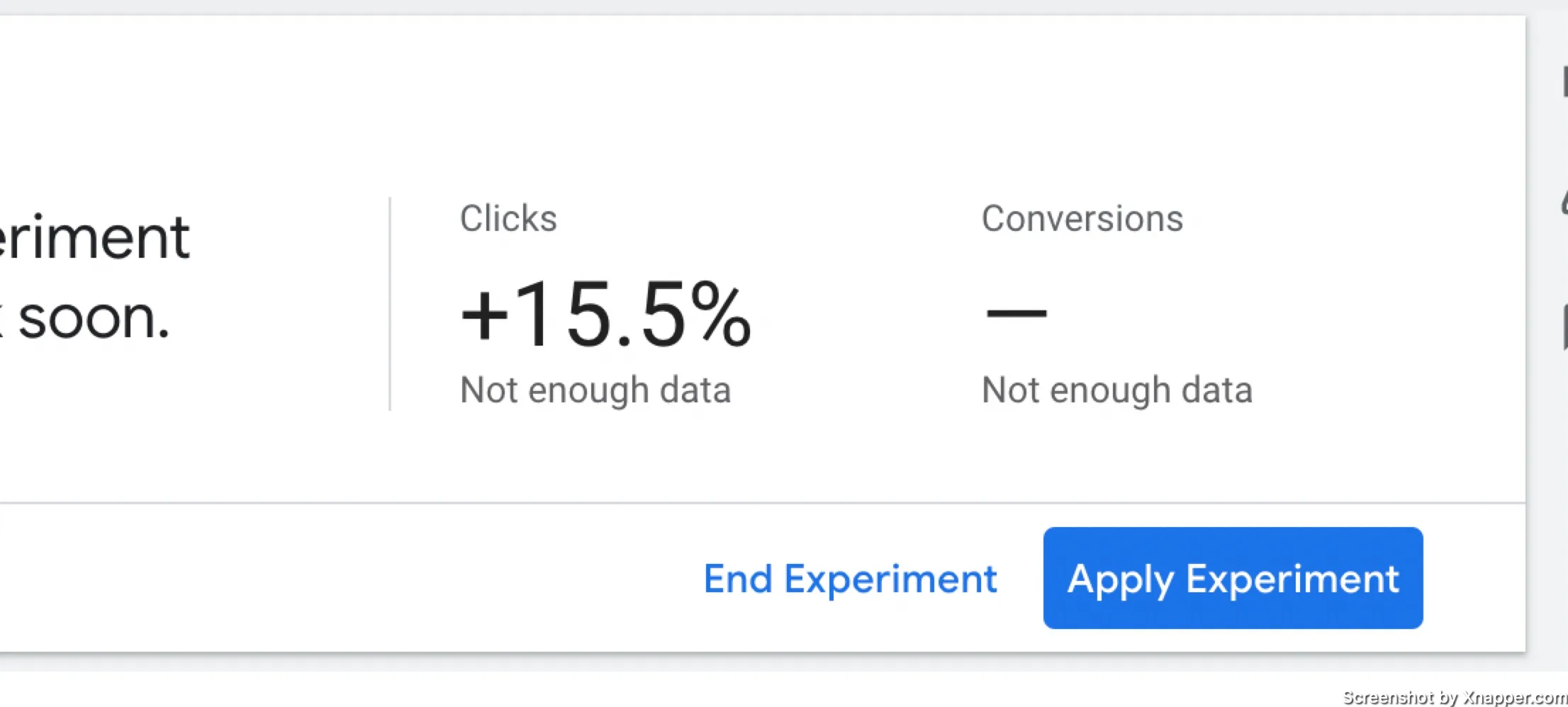

4. Set up the test in Google Ads

Google Ads has a built-in testing feature called Experiments. I won’t go into much detail here, as I have a separate post about experiments.

The experiment will require you to choose two metrics, but you will be able to see more after it starts. Your first metric should be your success metric, and the second can be, for example, Conversions. If you’re testing ads to improve CTR, you might want to see if additional clicks also increase conversions.

You will see these two metrics in the summary, like this:

The rest of the metrics are below. So don’t worry if you want to see more data. It will be available to you.

You will see when the results are conclusive, and then you can decide whether to apply the experiment or end it.

If your test variation wins, you usually just apply the experiment. Since the test campaign already has data, you won’t be starting over with a brand-new campaign. Applying isn’t mandatory, though. You can end the test and implement the changes manually, or you might decide to retest.

5. Rinse and repeat

You should be testing continuously. When one test is complete, have the next one ready. Below are some ideas to start with, but you should also brainstorm with your team or simply put those best practices to the test.

After each test, analyze what you have learned. Sometimes, you might need to retest the same hypothesis with some adjustments.

Use learnings to refine your strategy and this framework.

Testing is not a one-time task. Regular tests will ensure that you’re running the most effective campaigns possible.

Testing ideas for Google Ads campaigns

💡Test only one element at a time. If you change too many things, you won’t be able to tell what actually had an impact on your results.

Responsive Search Ads

Everything starts with your ad, so make it as attractive (clickable) as possible.

- Different headlines (benefits vs. features)

- Pinned vs. unpinned headlines.

- With keyword insertion and without it.

- Descriptions and description length.

- CTA: direct vs. indirect (Buy vs. Get it)

Ad Assets

It is recommended to have all applicable assets. However, you can test the content of those assets.

- Images. Emotional images vs. product images

- Sitelinks. You can test the number of sitelinks, the links and the texts in the sitelink

- Structured snippets. There are several categories you can choose. Brands vs. Categories vs. Features

- Callouts. Similarly, benefits vs. features or other attributes

Bidding strategies

I often read about people simply switching bidding strategies. You should never do that. Sure, there are exceptions, but you should always test big changes like this one.

If you’re using manual bidding and getting enough conversions, test smart bidding. You can test Maximize conversions with or without a target CPA. That’s two tests already.

Remember that smart bidding needs a lot of conversions. Even if you pass the minimum threshold of 30, the campaign’s traffic is split in half during the test, so each bidding strategy learns from only half of the conversions. Make sure you start the test with at least 200 conversions per campaign. This shortens the learning period, and you will reach conclusive results faster.
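The effect of the split is easy to see with a few numbers. A minimal Python sketch, assuming a 50/50 split and reusing the threshold of 30 conversions mentioned above; `conversions_per_arm` is a hypothetical helper, and in practice conversions won’t divide perfectly evenly between the two arms.

```python
from typing import Tuple

MIN_CONVERSIONS = 30  # the minimum threshold mentioned above


def conversions_per_arm(total: int, split: float = 0.5) -> Tuple[int, int]:
    """Split a campaign's conversions between control and test arms.
    Assumes conversions divide exactly in proportion to the traffic split."""
    test_arm = round(total * split)
    return total - test_arm, test_arm


for total in (40, 60, 200):
    control_arm, test_arm = conversions_per_arm(total)
    enough = min(control_arm, test_arm) >= MIN_CONVERSIONS
    print(f"{total} conversions -> control {control_arm}, test {test_arm}, "
          f"both at or above {MIN_CONVERSIONS}: {enough}")
```

With 40 conversions, each arm sees only 20, below the threshold, while 200 conversions leave each arm with 100 to learn from.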

Landing pages

This goes beyond the Google Ads account itself, but you can test landing pages through the same Experiments feature.

Your landing page is responsible for converting users. You might have amazing ads, but if your landing page sucks, everyone will leave.

- Headlines. This is the first thing a user sees. You need to perfect it through testing.

- CTA. Both color and text matter.

- Shorter pages vs. longer pages.

- Images vs. video

- Text testimonials vs. video

- Product-heavy page or emotional

- Features vs. benefits

- Hero images. This is the main image at the top of the page, usually near the headline.

Keyword match types

Match types still matter.

- Exact vs. broad. Since broad match uses more signals, test whether it brings you more conversions.

- Having exact terms along with broad match keywords

Performance Max tests

A lot of people have started using Performance Max, but few test its impact.

- Uplift for PMax. Impact on existing campaigns.

- PMax vs. Search

- PMax vs. Display

- PMax vs. Shopping

Now you will know whether PMax adds value, either directly or indirectly through other campaigns.

Video ads

Testing videos is harder as you have to produce a lot of different videos. However, the results can be rewarding.

- Longer vs. shorter videos

- CTA at the start vs. middle or end

- Product-oriented vs. emotional

- Opening hooks

- Background music

- Shorter cuts vs. longer cuts.

I’m sure you will find more ideas to test, and you will be tempted to Google for more. There is nothing wrong with getting more inspiration, but the best tests are the ones that come from your data or insights.

Observe your audience, ask for feedback, look at heatmaps or session recordings, and talk to your sales and support teams. You will find plenty of ideas related to your business.

Blogging gives me a chance to share my extensive experience with Google Ads. I hope you will find my posts useful. I try to write once a week, and you’re welcome to join my newsletter. Or we can connect on LinkedIn.